Opening the Black Box

It’s now time to see some machine learning in action. In what follows, a variety of techniques will be used on the same problem to provide a better feel for the sorts of tools that are available.

Process Overview

Despite the facade of a polished product one finds in published research, most of the approach with the statistical analysis of data is full of data preparation, starts and stops, debugging, re-analysis, tweaking and fine-tuning etc. Statistical learning is no different in this sense. Before we begin with explicit examples, it might be best to give a general overview of the path we’ll take.

Data Preparation

As with any typical statistical project, probably most of the time will be spent preparing the data for analysis. Data is never ready to analyze right away, and careful checks must be made in order to ensure the integrity of the information. This would include correcting errors of entry, noting extreme values, possibly imputing missing data and so forth. In addition to these typical activities, we will discuss a couple more things to think about during this initial data examination when engaged in machine learning.

Define Data and Data Partitions

As we have noted previously, ideally we will have enough data to incorporate some validation procedure. This would be some random partition of the data such that we could safely conclude that the data in the test set comes from the same population as the training set. The validation set is used to fit the initial models at various tuning parameter settings, with a ‘best’ model being that which satisfies some criterion. With final model and parameters chosen, generalization error will be assessed with the performance of the chosen model on the test data.

In addition, when data is too large to fit in memory, it will possibly need to be partitioned regardless of the modeling scenario. Spark, Hadoop and other frameworks allow for analysis of data that is potentially distributed across several machines or cores within a machine.

Feature Scaling

Even with standard regression modeling, centering continuous variables (subtracting the mean) is a good idea so that intercepts and zero points are meaningful. Standardizing variables so that they have similar variances or ranges will help some procedures find their maximums/minimums faster. Another common transformation is min-max normalization25, which will transfer a scale to a new one of some chosen minimum and maximum. Note that whatever approach is done, it must be done after any explicit separation of data. So if you have separate training and test sets, they should be scaled separately. Of course, with enough data and appropriate partitioning, it shouldn’t matter.

Feature Engineering

If we’re lucky we’ll have ideas on potential combinations or other transformations of the predictors we have available. For example, in typical social science research there are two-way interactions one is often predisposed to try, or perhaps one can sum multiple items to a single scale score that may be more relevant. Another common technique is to use a dimension reduction scheme such as principal components, but this can (and probably should) actually be implemented as part of the ML process26. However, many techniques are fine with more predictors than observations, even some still do well if many of them are irrelevant.

One can implement a variety of such approaches in ML to create additional potentially relevant features, as well, even automatically. As a reminder though, a key concern is overfitting, and doing broad construction of this sort with no contextual guidance would potentially be prone to such a pitfall. In other cases, it may simply be not worth the time expense.

Discretization

While there may be some contextual exceptions to the rule, it is generally a pretty bad idea in standard statistical modeling to discretize/categorize continuous variables27. However, some ML procedures will work better (or just notably faster) if dealing with discrete valued predictors rather than continuous. Others even require them; for example, logic regression needs binary input. While one could pick arbitrary intervals and cut-points in an unsupervised fashion, such as picking equal range bins or equal frequency bins, there are supervised approaches that will use the information in the data to produce some ‘optimal’ discretization.

It’s generally not a good idea to force things in data analysis, and given that a lot of data situations will contain mixed data types, it seems easier to simply apply some scaling to preserve the inherent relationships in the data. Again though, if one has only a relative few continuous variables, or a context in which it makes sense to, it’s probably better to leave continuous variables as such.

Model Selection

With data prepared and ready to analyze, one can use a validation process to come up with a viable model. Use an optimization procedure or a simple grid search over a set of specific values to examine models at different tuning parameters. Perhaps make a finer search once an initial range of good performing values is found, though one should not split hairs over arbitrarily close performance. Select a ‘best’ model given some criterion such as overall accuracy, or if concerned about over fitting, select the simplest model within one standard error of the accuracy of the best, or perhaps the simplest within X% of the best model. For highly skewed classes, one might need to use a different measure of performance besides accuracy, as simply guessing the most common category could lead to very high accuracy. If one has a great many predictor variables, one may use the model selection process to select features that are ‘most important’.

Model Assessment

With tuning parameters/features chosen, we then examine performance on the independent test set (or via some validation procedure). For classification problems, consider other statistics besides accuracy as measures of performance, especially if classes are unbalanced. Consider other analytical techniques that are applicable and compare performance among the different approaches. One can even combine disparate models’ predictions to possibly create an even better classifier28.

Regression

In typical model comparison within the standard linear model framework, there are a number of ways in which we might assess performance across competing models. For standard OLS regression we might examine adjusted-\(R^2\) or a statistical test with nested models, or with the generalized linear models we might pick a model with the lowest AIC29. As we have already discussed, in the machine learning context we are interested in models that reduce e.g. squared error loss (regression) or misclassification error (classification). However, in dealing with many models some differences in performance may be arbitrary.

Beyond Classification Accuracy: Other Measures of Performance

In typical classification situations we are interested in overall accuracy. There are situations however, not uncommon, in which simple accuracy isn’t a good measure of performance. As an example, consider the prediction of the occurrence of a rare disease. Guessing a non-event every time might result in 99.9% accuracy, but that isn’t how we would prefer to go about assessing some classifier’s performance. To demonstrate other sources of classification information, we will use the following 2x2 table that shows values of some binary outcome (0 = non-event, 1 = event occurs) to the predictions made by some model for that response (arbitrary model). Both a table of actual values, often called a confusion matrix30, and an abstract version are provided.

|

|

True Positive, False Positive, True Negative, False Negative: Above, these are A, B, D, and C respectively.

Accuracy: Number of correct classifications out of all predictions ((A+D)/Total). In the above example this would be (41+13)/91, about 59%.

Error Rate: 1 - Accuracy.

Sensitivity: is the proportion of correctly predicted positives to all true positive events: A/(A+C). In the above example this would be 41/57, about 72%. High sensitivity would suggest a low type II error rate (see below), or high statistical power. Also known as true positive rate.

Specificity: is the proportion of correctly predicted negatives to all true negative events: D/(B+D). In the above example this would be 13/34, about 38%. High specificity would suggest a low type I error rate (see below). Also known as true negative rate.

Positive Predictive Value (PPV): proportion of true positives of those that are predicted positives: A/(A+B). In the above example this would be 41/62, about 66%.

Negative Predictive Value (NPV): proportion of true negatives of those that are predicted negative: D/(C+D). In the above example this would be 13/29, about 45%.

Precision: See PPV.

Recall: See sensitivity.

Lift: Ratio of positive predictions given actual positives to the proportion of positive predictions out of the total: (A/(A+C))/((A+B)/Total). In the above example this would be (41/(41+16))/((41+21)/(91)), or 1.06.

F Score (F1 score): Harmonic mean of precision and recall: 2*(Precision*Recall)/(Precision+Recall). In the above example this would be 2*(.66*.72)/(.66+.72), about 0.69.

Type I Error Rate (false positive rate): proportion of true negatives that are incorrectly predicted positive: B/(B+D). In the above example this would be 21/34, about 62%. Also known as alpha.

Type II Error Rate (false negative rate): proportion of true positives that are incorrectly predicted negative: C/(C+A). In the above example this would be 16/57, about 28%. Also known as beta.

False Discovery Rate: proportion of false positives among all positive predictions: B/(A+B). In the above example this would be 21/62, about 34%. Often used in multiple comparison testing in the context of ANOVA.

Phi coefficient: A measure of association: (A*D - B*C)/(sqrt((A+C)*(D+B)*(A+B)*(D+C))). In the above example this would be 0.11.

Note the following summary of several measures where \(N_+\) and \(N_-\) are the total true positive values and total true negative values respectively, and \(T_+\), \(F_+\), \(T_-\) and \(F_-\) are true positive, false positive, etc.:

| Actual = 1 | Actual = 0 | |

|---|---|---|

| Predicted = 1 | T+/N+ = TPR = sensitivity =recall | F+/N- = Type I |

| Predicted = 0 | F-/N+ = Type II | T-/N+ = TNR = specificity |

There are many other measures such as area under a Receiver Operating Curve (ROC), odds ratio, and even more names for some of the above. The gist is that given any particular situation you might be interested in one or several of them, and it would generally be a good idea to look at a few.

The Dataset

We will use the wine data set from the UCI Machine Learning data repository. The goal is to predict wine quality, of which there are 7 values (integers 3-9). We will turn this into a binary classification task to predict whether a wine is ‘good’ or not, which is arbitrarily chosen as 6 or higher. After getting the hang of things, you might redo the analysis as a multiclass problem or even toy with regression approaches, just note there are very few 3s or 9s so you really only have 5 values to work with. The original data along with detailed description can be found here, but aside from quality it contains predictors such as residual sugar, alcohol content, acidity and other characteristics of the wine31.

The original data is separated into white and red data sets. I have combined them and created additional variables: color and its binary version white indicating whether it is a white wine, and good, indicating scores greater than or equal to 6 (denoted as ‘Good’, else ‘Bad’). Feel free to inspect the data a little bit before moving on32.

wine = read.csv('data/wine.csv')R Implementation

I will use the caret package in R. It makes implementation of validation, data partitioning, performance assessment, prediction and other procedures about as easy as it can be. However, caret is mostly using other R packages for the modeling functions underlying the process, and those should be investigated for additional information. Check out the caret home page for more detail. The methods selected here were chosen for breadth of approach, in order to give a good sense of the variety of techniques available. In what follows, the associated packages and functions used are:

- glmnet: glmnet

- class: knn

- caret: avNNet

- randomForest: randomForest

- e1071: svm

In addition to caret, it’s a good idea to use your computer’s resources as much as possible, or some of these procedures may take a notably long time, and more so with the more data you have. The caret package will do this behind the scenes, but you first need to set things up. Say, for example, you have a quad core processor, meaning your processor has four cores essentially acting as independent CPUs. You can set up R for parallel processing, then run caret functions as you normally would. The following code demonstrates how, but see this for details.

library(doParallel)

cl = makeCluster(7)

registerDoParallel(cl)

# All subsequent models are then run in parallel

model = train(y ~ ., data = training, method = 'rf')Feature Selection & The Data Partition

This data set is large enough to leave a holdout sample, allowing us to initially search for the best of a given modeling approach over a grid of tuning parameters specific to the technique. To iterate previous discussion, we don’t want test performance contaminated with the tuning process. With the best model at \(t\) tuning parameter(s), we will assess performance with prediction on the holdout set.

I also made some decisions to deal with the notable collinearity in the data, which can severely hamper some methods. We can look at the simple correlation matrix to start (hover for values).

I ran regressions to examine the R2 for each predictor in a model as if it were the dependent variable predicted by the other input variables. The highest was for density at over 96%33, and further investigation suggested color and sulfur dioxide are largely captured by the other variables already also. These will not be considered in the following models.

Caret has its own partitioning function we can use here to separate the data into training and test data. There are 6497 total observations, of which I will put 80% into the training set. The function createDataPartition will produce indices to use as the training set. In addition to this, we will standardize the continuous variables to have a mean of zero and standard deviation of one. For the training data set, this will be done as part of the training process, so that any subsets under consideration are scaled separately, but for the test set caret will do it automatically.

library(caret)

set.seed(1234) # so that the indices will be the same when re-run

trainIndices = createDataPartition(wine$good, p=.8, list=F)

wine_train = wine %>%

select(-free.sulfur.dioxide, -density, -quality, -color, -white) %>%

slice(trainIndices)

wine_test = wine %>%

select(-free.sulfur.dioxide, -density, -quality, -color, -white) %>%

slice(-trainIndices)Let’s take an initial peek at how the predictors separate on the target. In the following I’m ‘predicting’ the pre-possessed data so as to get the transformed data. Again, we’ll leave the preprocessing to the training process eventually, but here it will put them on the same scale for visual display.

wine_trainplot = select(wine_train, -good) %>%

preProcess(method='range') %>%

predict(newdata= select(wine_train, -good))

## featurePlot(wine_trainplot, wine_train$good, 'box')For the training set, it looks like alcohol content34 and volatile acidity separate most with regard to the ‘good’ class. While this might give us some food for thought, note that the figure does not give insight into interaction or nonlinear effects, which some methods we use will explore.

Regularized Regression

We discussed regularized regression previously, and can start with such models. This allows us to begin with the familiar (logistic) regression model we’re used to, while incorporating concepts and techniques from the machine learning world.

In this example, we’ll use the glmnet package, which will allow for a mix of lasso and ridge regression approaches. Thus, we’ll have a penalty based on both absolute and squared values of the coefficients. In this case, if we set the mixing parameter alpha to 1, we have your standard lasso penalty, and 0 would be the ridge penalty. We have another parameter, lambda, which controls the penalty amount.

You may be thinking- what should alpha and lambda be set to? They are examples of tuning parameters, for which we have no knowledge about their value without doing some initial digging. As such we will select their values as part of the validation process35.

The caret package provides several techniques for validation such as \(k\)-fold, bootstrap, leave-one-out and others. We will use 10-fold cross validation. We will also set up a range of values for alpha and lambda. However, note that caret also has ways to search the space automatically. One argument, tuneLength, will create a grid of that number of values for each tuning parameter (e.g. tuneLength=3 for two parameters would create a 9x2 grid as we do below). In addition, there is a search argument for trainControl that can search the parameter space randomly but create no more values than what is specified by tuneLength.

cv_opts = trainControl(method='cv', number=10)

regreg_opts = expand.grid(.alpha = seq(.1, 1, length = 5),

.lambda = seq(.1, .5, length = 5))

results_regreg = train(good~.,

data=wine_train,

method = "glmnet",

trControl = cv_opts,

preProcess = c("center", "scale"),

tuneGrid = regreg_opts)glmnet

5199 samples

9 predictor

2 classes: 'Bad', 'Good'

Pre-processing: centered (9), scaled (9)

Resampling: Cross-Validated (10 fold)

Summary of sample sizes: 4680, 4679, 4679, 4679, 4679, 4679, ...

Resampling results across tuning parameters:

alpha lambda Accuracy Kappa

0.100 0.1 0.7268688 0.3528105028

0.100 0.2 0.6935995 0.2373904919

0.100 0.3 0.6720595 0.1487924478

0.100 0.4 0.6497426 0.0644957753

0.100 0.5 0.6368550 0.0159741918

0.325 0.1 0.7151424 0.3159373449

0.325 0.2 0.6701339 0.1370788016

0.325 0.3 0.6330066 0.0002775041

0.325 0.4 0.6330066 0.0000000000

0.325 0.5 0.6330066 0.0000000000

0.550 0.1 0.7037933 0.2757011572

0.550 0.2 0.6330066 0.0000000000

0.550 0.3 0.6330066 0.0000000000

0.550 0.4 0.6330066 0.0000000000

0.550 0.5 0.6330066 0.0000000000

0.775 0.1 0.6891786 0.2192358192

0.775 0.2 0.6330066 0.0000000000

0.775 0.3 0.6330066 0.0000000000

0.775 0.4 0.6330066 0.0000000000

0.775 0.5 0.6330066 0.0000000000

1.000 0.1 0.6620551 0.1130690396

1.000 0.2 0.6330066 0.0000000000

1.000 0.3 0.6330066 0.0000000000

1.000 0.4 0.6330066 0.0000000000

1.000 0.5 0.6330066 0.0000000000

Accuracy was used to select the optimal model using the largest value.

The final values used for the model were alpha = 0.1 and lambda = 0.1.The results suggest \(\alpha\) = 0.1 and \(\lambda\) = 0.1. Do not mistake these for truth! However, we have to make a decision, and this at least provides us the means. The additional column, \(\kappa\) (kappa), can be seen as a measure of agreement between predictions and true values, but corrected for chance agreement. It suggests several settings would result in models that do not do any better than chance. Here is visual display of the results.

ggplot(results_regreg)ggplot(results_regreg) +

theme_trueMinimal() With the tuning parameters in place, now let’s see the results on the test set. The predict function here will automatically use the best \(\alpha\) and \(\lambda\) from the previous process.

preds_regreg = predict(results_regreg, wine_test)

good_observed = wine_test$good

confusionMatrix(preds_regreg, good_observed, positive='Good')Confusion Matrix and Statistics

Reference

Prediction Bad Good

Bad 197 76

Good 279 746

Accuracy : 0.7265

95% CI : (0.7014, 0.7506)

No Information Rate : 0.6333

P-Value [Acc > NIR] : 6.665e-13

Kappa : 0.3531

Mcnemar's Test P-Value : < 2.2e-16

Sensitivity : 0.9075

Specificity : 0.4139

Pos Pred Value : 0.7278

Neg Pred Value : 0.7216

Prevalence : 0.6333

Detection Rate : 0.5747

Detection Prevalence : 0.7897

Balanced Accuracy : 0.6607

'Positive' Class : Good

We get a lot of information here, but to focus on accuracy, we get around 72.7%. Note that this is performance on the test set, i.e. data that wasn’t used to train the model. The lower bound (and p-value) suggests we are statistically predicting better than the No Information Rate (i.e., just guessing the more prevalent ‘Bad’ category), and sensitivity and positive predictive power are good, though at the cost of being able to distinguish bad wine. The other metrics not previously discussed are as follows:

- Kappa: a chance-corrected measure of agreement between predictions and true values.

- Mcnemar’s test: this is actually just Mcnemar’s test on the confusion matrix; like a Chi-square for paired data.

- Prevalence: how often does the event occur (A+C)/Total

- Detection Rate: A/Total

- Detection Prevalence: How often the positive class was predicted (A+B)/Total

- Balanced Accuracy: The mean of sensitivity and specificity.

In addition, one can get the precision/recall form of the output by changing the mode for the confusion matrix.

confusionMatrix(preds_regreg, good_observed, positive='Good', mode='prec_recall')Confusion Matrix and Statistics

Reference

Prediction Bad Good

Bad 197 76

Good 279 746

Accuracy : 0.7265

95% CI : (0.7014, 0.7506)

No Information Rate : 0.6333

P-Value [Acc > NIR] : 6.665e-13

Kappa : 0.3531

Mcnemar's Test P-Value : < 2.2e-16

Precision : 0.7278

Recall : 0.9075

F1 : 0.8078

Prevalence : 0.6333

Detection Rate : 0.5747

Detection Prevalence : 0.7897

Balanced Accuracy : 0.6607

'Positive' Class : Good

Perhaps the other approaches that follow will have more success, but note that the caret package does have the means to focus on other metrics during the training process besides accuracy, such as sensitivity, which might help. Also, feature combination or other avenues might help improve the results as well.

Additional information from this modeling process reflects the importance of predictors. For most methods accessed by caret, the default variable importance metric regards the area under the curve or AUC from a ROC analysis with regard to each predictor, and is model independent. This is then normalized so that the least important is 0 and most important is 10036. For this approach, i.e. with glmnet, it merely reflects the absolute value of the coefficients, which is okay since we scaled the data as part of the process.

Strengths & Weaknesses

Strengths

- Intuitive approach. In the end, it’s still just a standard regression model you’re already familiar with.

- Widely used for many problems. Would be fine to use in any setting you would use standard/logistic regression.

Weaknesses

- Does not automatically seek out interactions and non-linearity, and as such will generally not be as predictive as other techniques.

- Variables have to be scaled or results will just be reflective of data types.

- May have issues with correlated predictors

Final Thoughts

Incorporating regularization would be fine as your default method, and something to strongly consider. Furthermore, these approaches will have better prediction on new data than their standard complements. As such they are a nice balance between staying interpretable while enhancing predictive capability. However, in general they are not going to be as strong of a method as others in the ML universe, and possibly not even competitive without a lot of feature engineering. If prediction is all you care about for a particular modeling setting, you’ll want to try something else.

\(k\)-nearest Neighbors

Consider the typical distance matrix37 that is often used for cluster analysis of observations38. If we choose something like Euclidean distance as a metric, each point in the matrix gives the value of how far an observation is from some other, given their respective values on a set of variables.

K-nearest neighbors approaches exploit this information for predictive purposes. Let us take a classification example, and \(k = 5\) neighbors. For a given observation \(x_i\), find the 5 closest neighbors in terms of Euclidean distance based on the predictor variables. The class that is predicted is whatever class the majority of the neighbors are labeled as39. For continuous outcomes, we might take the mean of those neighbors as the prediction.

In this scenario, the number of neighbors \(k\) will be the tuning parameter, and so we will have a set of values for \(k\) to try out40. The workhorse function under the hood originally comes from the highly descriptively named class package, authored by the well-known country music duo Venables & Ripley.

knn_opts = data.frame(k=c(seq(3, 11, 2), 25, 51, 101)) # odd to avoid ties

results_knn = train(good~.,

data=wine_train,

method='knn',

preProcess=c('center', 'scale'),

trControl=cv_opts,

tuneGrid = knn_opts)

results_knnk-Nearest Neighbors

5199 samples

9 predictor

2 classes: 'Bad', 'Good'

Pre-processing: centered (9), scaled (9)

Resampling: Cross-Validated (10 fold)

Summary of sample sizes: 4680, 4679, 4679, 4679, 4679, 4679, ...

Resampling results across tuning parameters:

k Accuracy Kappa

3 0.7536051 0.4609387

5 0.7443747 0.4359164

7 0.7505278 0.4479179

9 0.7487971 0.4432620

11 0.7551403 0.4575305

25 0.7528411 0.4504282

51 0.7493703 0.4368867

101 0.7445618 0.4207894

Accuracy was used to select the optimal model using the largest value.

The final value used for the model was k = 11.In this case it looks like choosing the nearest 11 neighbors (\(k=\) 11) works best in terms of accuracy. Now that \(k\) is chosen, let’s see how well the model performs on the test set.

preds_knn = predict(results_knn, wine_test)

confusionMatrix(preds_knn, good_observed, positive='Good')Confusion Matrix and Statistics

Reference

Prediction Bad Good

Bad 271 134

Good 205 688

Accuracy : 0.7388

95% CI : (0.714, 0.7625)

No Information Rate : 0.6333

P-Value [Acc > NIR] : 3.76e-16

Kappa : 0.4195

Mcnemar's Test P-Value : 0.0001436

Sensitivity : 0.8370

Specificity : 0.5693

Pos Pred Value : 0.7704

Neg Pred Value : 0.6691

Prevalence : 0.6333

Detection Rate : 0.5300

Detection Prevalence : 0.6880

Balanced Accuracy : 0.7032

'Positive' Class : Good

Strengths & Weaknesses

Strengths41

- Intuitive approach.

- Robust to outliers on the predictors.

Weaknesses

- Susceptible to irrelevant features.

- Susceptible to correlated inputs.

- Ability to handle data of mixed types.

- Notably imbalanced target labels.

- Big data. Though approaches are available that help in this regard.

Final Thoughts

To be perfectly honest, knn approaches have mostly fallen out of favor, due to the above issues and their relatively poor predictive capability in common settings. However, they are conceptually simple, and thus a good way to start getting used to machine learning approaches, as knn regression doesn’t take one too far afield from what they already know (i.e. regression, distance matrix/clustering).

Neural Networks

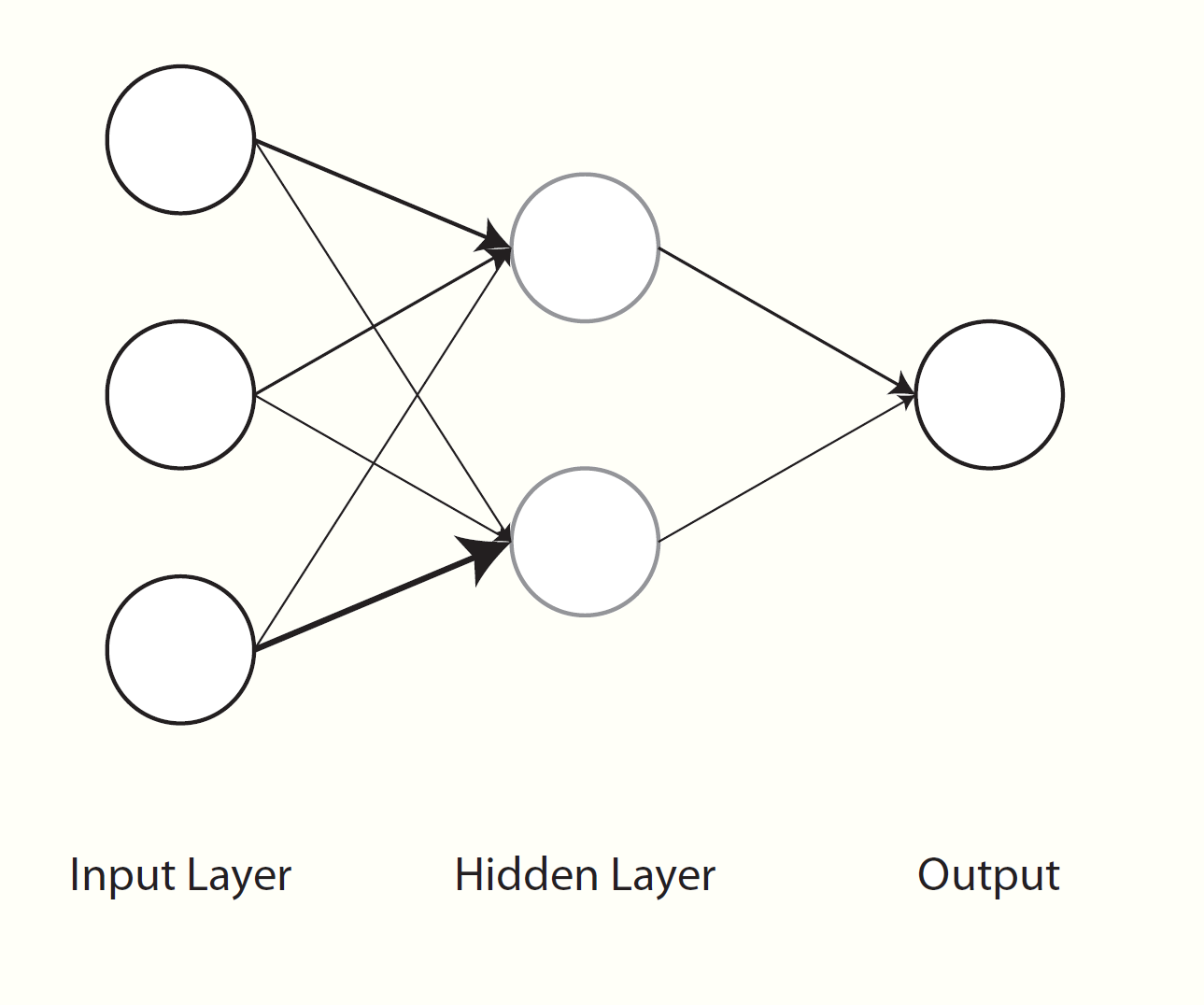

Neural networks have been around for a long while as a general concept in artificial intelligence and even as a machine learning algorithm, and often work quite well. In some sense, neural networks can be thought of as nonlinear regression42. Visually however, we can see them as a graphical model with layers of inputs and outputs. Weighted combinations of the inputs are created and put through some function (e.g. the sigmoid function) to produce the next layer of inputs. This next layer goes through the same process to produce either another layer, or to predict the output, or even multiple outputs, which serves as the final layer. All the layers between the input and output are usually referred to as hidden layers. If there were a single hidden layer with a single unit and no transformation, then it becomes the standard regression problem.

One of the issues with neural nets is determining how many hidden layers and how many hidden units in a layer. Overly complex neural nets will suffer from high variance will thus be less generalizable, particularly if there is less relevant information in the training data. Another notion is that of weight decay, however this tied to the same concept as regularization, which we discussed in a previous section, where a penalty term would be applied to a norm of the weights. Another regularization technique is dropout, where either observed or hidden units are randomly removed, and results averaged from the subsequent models.

A comment about the following: if you are not set up for utilizing multiple processors the following will be relatively slow. You can replace method = 'avNNet', from the caret package, with method = 'nnet' from the nnet package, and shorten tuneLength = 3, which will be faster without much loss of accuracy. Also, the function we’re using has only one hidden layer, but the other neural net methods accessible via the caret package may allow for more, though the gains in prediction with additional layers are likely to be modest relative to complexity and computational cost. In addition, if the underlying function43 has additional arguments, you may pass those on in the train function itself. Here I am increasing the maxit, or maximum iterations, argument.

results_nnet = train(good~.,

data=wine_train,

method='avNNet',

trControl=cv_opts,

preProcess=c('center', 'scale'),

tuneLength=5,

trace=F,

maxit=1000)

results_nnetModel Averaged Neural Network

5199 samples

9 predictor

2 classes: 'Bad', 'Good'

Pre-processing: centered (9), scaled (9)

Resampling: Cross-Validated (10 fold)

Summary of sample sizes: 4680, 4679, 4679, 4679, 4679, 4679, ...

Resampling results across tuning parameters:

size decay Accuracy Kappa

1 0e+00 0.7418758 0.4281475

1 1e-04 0.7418758 0.4281475

1 1e-03 0.7422604 0.4291346

1 1e-02 0.7420674 0.4290125

1 1e-01 0.7409135 0.4272660

3 0e+00 0.7564883 0.4601712

3 1e-04 0.7561070 0.4607032

3 1e-03 0.7582201 0.4652806

3 1e-02 0.7564905 0.4611899

3 1e-01 0.7593781 0.4670304

5 0e+00 0.7674557 0.4825182

5 1e-04 0.7622590 0.4742432

5 1e-03 0.7634147 0.4768157

5 1e-02 0.7620711 0.4743422

5 1e-01 0.7609150 0.4715354

7 0e+00 0.7714979 0.4965133

7 1e-04 0.7688004 0.4907053

7 1e-03 0.7637997 0.4782126

7 1e-02 0.7678400 0.4885388

7 1e-01 0.7607183 0.4712326

9 0e+00 0.7620574 0.4608267

9 1e-04 0.7755352 0.5048095

9 1e-03 0.7695685 0.4919259

9 1e-02 0.7701484 0.4935035

9 1e-01 0.7632264 0.4786383

Tuning parameter 'bag' was held constant at a value of FALSE

Accuracy was used to select the optimal model using the largest value.

The final values used for the model were size = 9, decay = 1e-04 and bag = FALSE.We see that the best model has 9 hidden layer nodes and a decay parameter of 10^{-4}. Here is a visual display of those results.

ggplot(results_nnet)ggplot(results_nnet) +

theme_trueMinimal() +

labs(x='Number of Hidden Units') +

scale_x_continuous(breaks = c(1,3,5,7,9))Typically, you might think of how many hidden units you want to examine in terms of the amount of data you have (i.e. estimated parameters to N ratio), and here we have a decent amount of data. In this situation, you might start with very broad values for the number of inputs (e.g. a sequence by 10s) and then narrow your focus (e.g. between 20 and 30s)44, but with at least some weight decay you should be able to avoid overfitting. I was able to get an increase in test accuracy of about 1.5% using up to 50 hidden units.

preds_nnet = predict(results_nnet, wine_test)

confusionMatrix(preds_nnet, good_observed, positive='Good')Confusion Matrix and Statistics

Reference

Prediction Bad Good

Bad 288 114

Good 188 708

Accuracy : 0.7673

95% CI : (0.7434, 0.7901)

No Information Rate : 0.6333

P-Value [Acc > NIR] : < 2.2e-16

Kappa : 0.4821

Mcnemar's Test P-Value : 2.661e-05

Sensitivity : 0.8613

Specificity : 0.6050

Pos Pred Value : 0.7902

Neg Pred Value : 0.7164

Prevalence : 0.6333

Detection Rate : 0.5455

Detection Prevalence : 0.6903

Balanced Accuracy : 0.7332

'Positive' Class : Good

We note improved prediction with the neural net model relative to the previous approaches, with increases in accuracy (76.7%), sensitivity, specificity etc.

Strengths & Weaknesses

Strengths

- Good prediction generally.

- Incorporating the predictive power of different combinations of inputs.

- Some tolerance to correlated inputs.

Weaknesses

- Susceptible to irrelevant features.

- Not robust to outliers.

- For traditional implementations, big data with complex models.

Final Thoughts

As a final note, to say that neural networks have undergone a resurgence over the past 10 years is a gross understatement. Practically everything you’re hearing about artificial intelligence and deep learning can be translated to ‘complicated neural networks’. Neural networks are so ubiquitous, the inevitable backlash has now begun, where some are questioning whether they can be taken any further. In any event, you should be familiar with them. A nice visualization of how neural networks work can be found at Chris Colah’s blog. I talk a little about deep learning in the next section, and there is some demo code in the appendix.

Trees & Forests

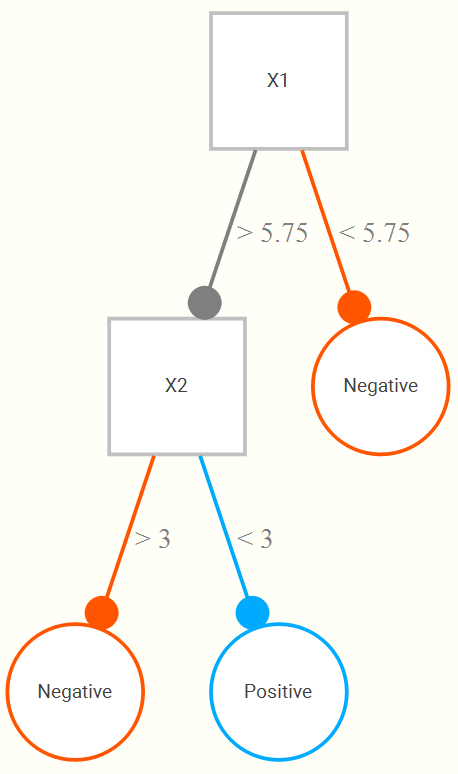

Classification and regression trees provide yet another and notably different approach to prediction. Consider a single input variable and binary dependent variable. We will start by searching all values of the input to find a point where, if we partition the data at that point, it will lead to the best classification accuracy. So, for a single variable whose range might be 1 to 10, we find that a cut at 5.75 results in the best classification if all observations greater than or equal to 5.75 are classified as positive, and the rest negative. This general approach is fairly straightforward and conceptually easy to grasp, and it is because of this that tree approaches are appealing.

Now let’s add a second input, also on a 1 to 10 range. We now might find that even better classification results if, upon looking at the portion of data regarding those greater than or equal to 5.75, that we only classify positive if they are also less than 3 on the second variable. The following is a hypothetical tree reflecting this.

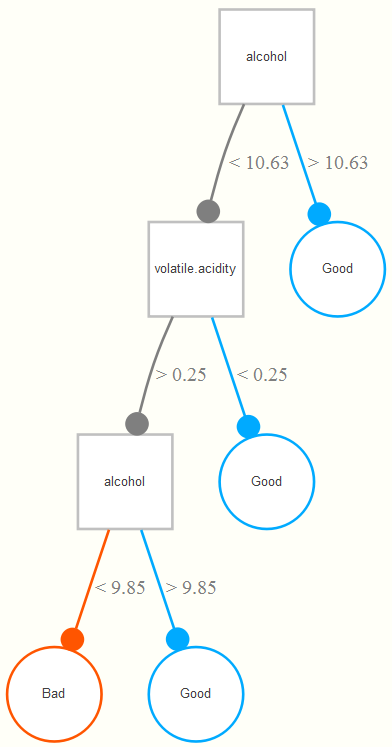

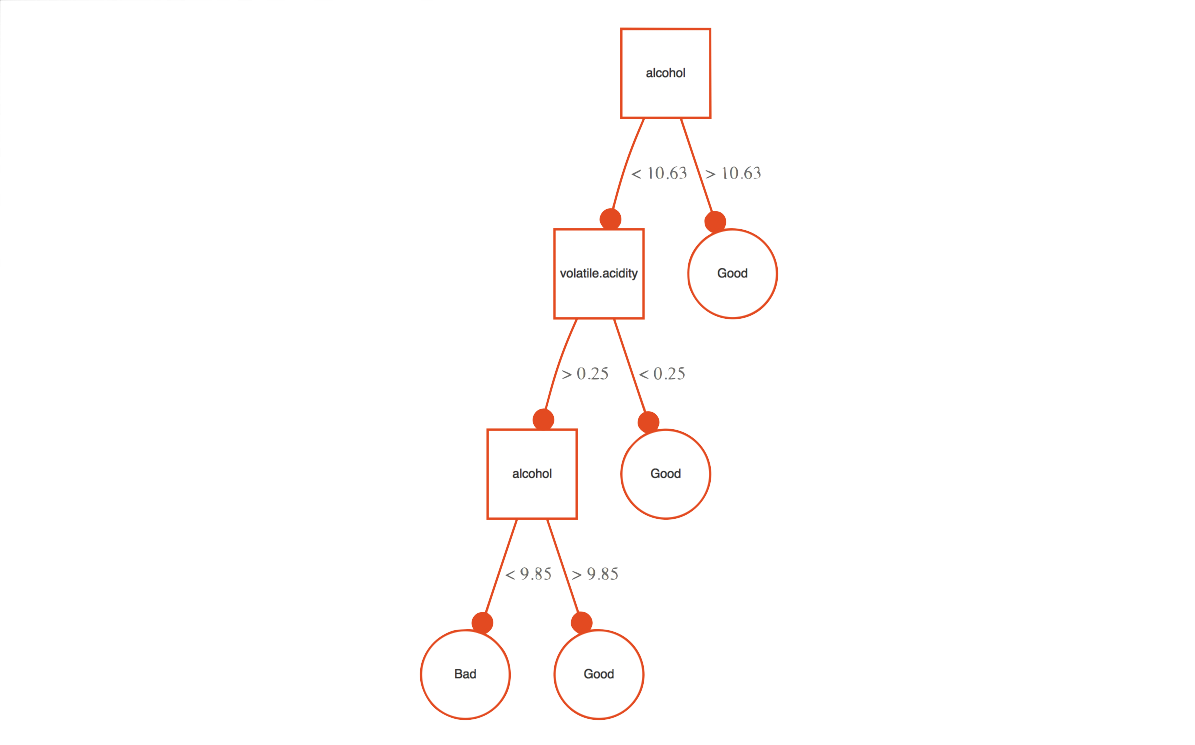

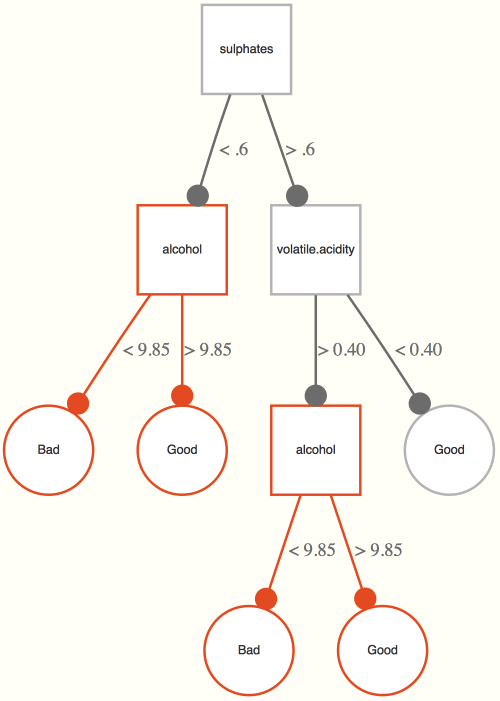

Now let’s see what kind of tree we might come up with for our data. The example tree here is based on the wine training data set. It is interpreted as follows. If the alcohol content is greater than 10.63%, a wine is classified as good. For those less than 10.63, if its volatile acidity is also less than .25, they are also classified as good, and of the remaining observations, if they are at least more than 9.85% in alcohol content (i.e. volatility >.25, alcohol between 9.85 and 10.63), they also get classified as good. Any remaining observations are classified as bad wines. In this way, the classification tree is getting at interactions among the variables involved- volatile acidity interacts with alcohol in predicting whether the wine is good or not.

Unfortunately, a single tree, while highly interpretable, does poorly for predictive purposes. In standard situations, we will instead use the power of many trees, i.e. a random forest, based on repeated sampling of the original data. So if we create 1000 new training data sets based on random samples of the original data (each of size N, i.e. a bootstrap of the original data set), we can run a tree for each, and assess the predictions each tree would produce for the observations for a hold-out set (or simply those observations which weren’t selected during the sampling process, the ‘out-of-bag’ sample), in which the new data is ‘run down the tree’ to obtain predictions. The final class prediction for an observation is determined by majority vote across all trees.

Random forests are referred to as an ensemble method, one that is actually a combination of many models, and there are others we’ll mention later. There are other things to consider, such as how many variables to make available for consideration at each split, and this is the tuning parameter of consequence here (the argument mtry). In this case we will investigate subsets of 2 through 6 possible predictors. With this value determined via cross-validation, we can apply the best approach to the hold out test data set.

There’s a lot going on here to be sure: there is a sampling process for cross-validation, there is re-sampling to produce the forest, there is random selection of mtry predictor variables, etc. But in the end, we are just harnessing the power of many trees, any one of which would be highly interpretable. In the following, the randomForest package does the work.

rf_opts = data.frame(mtry=c(2:6))

results_rf = train(good~.,

data = wine_train,

method = 'rf',

preProcess = c('center', 'scale'),

trControl = cv_opts,

tuneGrid = rf_opts,

localImp = T,

ntree=1000)Random Forest

5199 samples

9 predictor

2 classes: 'Bad', 'Good'

Pre-processing: centered (9), scaled (9)

Resampling: Cross-Validated (10 fold)

Summary of sample sizes: 4680, 4679, 4679, 4679, 4679, 4679, ...

Resampling results across tuning parameters:

mtry Accuracy Kappa

2 0.8315083 0.6287923

3 0.8297761 0.6259698

4 0.8286218 0.6237217

5 0.8278526 0.6227583

6 0.8251581 0.6168325

Accuracy was used to select the optimal model using the largest value.

The final value used for the model was mtry = 2.The initial results look promising with mtry = 2 producing the best initial result. Now for application to the test set.

preds_rf = predict(results_rf, wine_test)

confusionMatrix(preds_rf, good_observed, positive='Good')Confusion Matrix and Statistics

Reference

Prediction Bad Good

Bad 331 95

Good 145 727

Accuracy : 0.8151

95% CI : (0.7929, 0.8359)

No Information Rate : 0.6333

P-Value [Acc > NIR] : < 2.2e-16

Kappa : 0.5929

Mcnemar's Test P-Value : 0.001562

Sensitivity : 0.8844

Specificity : 0.6954

Pos Pred Value : 0.8337

Neg Pred Value : 0.7770

Prevalence : 0.6333

Detection Rate : 0.5601

Detection Prevalence : 0.6718

Balanced Accuracy : 0.7899

'Positive' Class : Good

This is our best result so far with 81.5% accuracy, with a lower bound well beyond the 63% we’d have guessing. Random forests do not suffer from some of the data-specific issues that might be influencing the other approaches, such as irrelevant and correlated predictors, and furthermore benefit from the combined information of many models. Such performance increases are not a given, but random forests are generally a good method to consider given their flexibility, and will often by default do very well relative to other approaches45.

Incidentally, the underlying randomForest function here allows one to assess variable importance in a different manner46, and there are other functions used by caret that can produce their own metrics also. In this case, randomForest can provide importance based on a version of the ‘decrease in inaccuracy’ approach we talked before (as well as another index known as Gini impurity). The same two predictors are found to be most important and notably more than others- alcohol and volatile.acidity.

Understanding the Results

As we get further into black box models, it becomes harder to understand what is going on with the predictors. If our only goal is prediction, we might not care that much. However, advances continue to be made in helping us understand what’s happening under the hood. In what follows we seek to understand exactly what is going on with this model. Some of what will follow is specific to forest/tree approaches, but others are more general.

Variable Importance

VIMP

To begin, we can get variable importance metrics as we did before with the regularized regression. How might we get such a measure from a random forest? The package itself provides two different ways. From the help file:

The first measure is computed from permuting OOB (out-of-bag) data: For each tree, the prediction error on the out-of-bag portion of the data is recorded (error rate for classification, MSE for regression). Then the same is done after permuting each predictor variable (adding noise). The difference between the two are then averaged over all trees, and normalized by the standard deviation of the differences.

The second measure is the total decrease in node impurities from splitting on the variable, averaged over all trees. For classification, the node impurity is measured by the Gini index. For regression, it is measured by residual sum of squares.

So, the first compares the prediction of a variable with a random version of itself, while the second considers the error rates induced by splitting on a variable. Either way, we can then see which variables are relatively more important. The following shows the normalized result where the best predictor is given a value of 100, and the worst 0.

varImp(results_rf)| Variable | Good |

|---|---|

| alcohol | 100.00 |

| volatile.acidity | 47.36 |

| sulphates | 19.16 |

| residual.sugar | 5.34 |

| pH | 4.16 |

| total.sulfur.dioxide | 4.12 |

| chlorides | 3.90 |

| citric.acid | 3.17 |

| fixed.acidity | 0.00 |

Minimal depth

There are some issues with the basic variable importance approach outlined previously, such as being dependent on the type of prediction error used, and going much further than simply rank ordering variables. Minimal depth provides a more formal assessment based on the tree structure. Given the trees in a forest, more important variables are those that are split higher up the tree. We can essentially get the average depth at which a variable occurs across all trees.

The first thing considered are the subtrees containing splits on the variable in question. We want maximal subtrees where the variable is split. For example, in our previous example the maximal subtree for alcohol is the entire tree, and its minimal depth is 0, as it is split at the root note47.

For the following, we have two splits with alcohol in a single tree, thus there are two maximal subtrees one at depth 1 and one at depth 2. All maximal subtrees are considered for minimal depth importance.

We can use randomForestExplainer to get and plot this information, both for individual variables but also interactions of two or more variables. Note that the latter will take an inordinately long time.

library(randomForestExplainer)

plot_min_depth_distribution(results_rf$finalModel)

plot_min_depth_interactions(results_rf$finalModel, k=7) # only do 7 best interactionsWe can see at least one difference relative to the previous VIMP measures. It appears that other measures’ importance are due more to their interaction with alcohol. Here is an interactive plot of the alcohol-volatile acidity interaction, which had the minimal depth. High alcohol and low acidity are very likely to be rated ‘good’, and vice versa.

Other Measures

There are still other measures of importance we can obtain, and the following shows how to get them and plot them using randomForestExplainer. Details about the measures can be found in the appendix. As one can see, they measure different things and thus will come to different conclusions.

multi_imps = measure_importance(results_rf$finalModel)

plot_importance_ggpairs(multi_imps)In the end, you’ll have a good idea of what predictor variables are driving your result.

Effects

Visualization

So we can see that alcohol content and volatile acidity are the most important, but what is the nature of the relationship? Partial dependence plots allow us to understand the predictive effect of any particular covariate, controlling for the effects of others. This is particularly important in the case where we have nonlinear relationships as we do here.

The ggRandomForests package provides such plots, as well as other ways to explore variable importance we’ll look at. Unfortunately, despite appearances to the contrary, I could find no example that used a basic randomForest class object, and none worked with the final model, so we’ll use the randomForestSRC package it is primarily associated with. In general though, you could get something similar by using the basic predict function and a little effort.

# https://arxiv.org/pdf/1501.07196

# tibble causes problem so convert wine_train to standard df.

library(ggRandomForests)

rf2 = rfsrc(formula = good ~.,

data = data.frame(wine_train),

mtry = results_rf$finalModel$mtry)gg_v = gg_variable(rf2)

gg_md = gg_minimal_depth(rf2)

# We want the top two ranked minimal depth variables only

xvar = gg_md$topvars[1:2]

plot(gg_v, xvar=xvar, panel=TRUE, partial=TRUE, alpha=.1)We can see with alcohol that the increasing probability of a good classification with increasing alcohol content only really starts with at least alcohol of 10% and only up until about 12.5% after which it’s not going to do much better. With volatile acidity, the trend is generally negative for increasing acidity. Note that you can think of these as edge-on views of the previous 3D image.

LIME

A relatively newer approach to understanding black box methods allows us to understand effects down to specific observations. The Local Interpretable Model-agnostic Explanations (LIME) approach is applicable to any model, and the basic idea is to provide a human interpretable prediction- not just a prediction, but also provide why that prediction was made. People using the model have some idea of what’s going on in a substantive sense (i.e. domain knowledge), but they will trust the prediction of such a model if they understand the reasoning behind it. Examining this prediction reasoning at representative or otherwise key settings of the predictors allows for a more global understanding of the model as a whole.

Here is the gist of the approach (slightly more detail in [appendix][local-interpretable-model-agnostic-explanations]):

- Permute the data \(n\) times to create data with similar distributional properties to the original.

- Get similarity scores of the permuted observations to the observations you wish to explain.

- Make predictions with the permuted data based on the ML model.

- Select \(m\) features (e.g. forward selection, lasso) best describing the complex model outcome from the permuted data.

- Fit a simple model, e.g. standard regression, predicting the predictions from the ML model with the \(m\) features, where observations are weighted by similarity to the to-be-explained observations.

Perhaps if we look at the product we can get more information. We get some sample observations, create an ‘explainer’, and then explain the predictions of those observations.

set.seed(1234)

sample_index = sample(1:nrow(wine_test), 5)

sample_test = wine_test %>%

slice(sample_index) %>%

select(-good)

library(lime)

rf_lime = lime(wine_train, results_rf)

rf_explain = explain(sample_test,

rf_lime,

n_features = 3,

feature_select = 'highest_weights',

labels = 'Good')The rf_explain object is a data frame that can be explored as is, or visualized. We’ll start with the feature plot.

plot_features(rf_explain)Consider case 1. The predicted probability of the being a good wine is low at 0.42. The main reasons for this are that its volatile acidity is greater than .4, and its alcohol content is relatively low48. It’s sulphates content is actually working towards a ‘good’ classification. The weights give a us a good idea of how much the predicted probability would change if we did not have that particular feature present. The explanation fit is a measure of the fit of the simple model, basically just an R2.

Now for case 2. This is very strongly classified as a good wine, with high alcohol and low acidity. Without those, this would be a much less confident understanding of the prediction. Case three has a similar expected probability of being classified ‘good’, but the value of that observation for volatile acidity actually makes us a little less confident.

With case 4 & 5, we see an interesting situation. Case 4 has a lower probability of being good, but the explanation is relatively better. The alcohol works against a good classification in both cases. For the Case 4 though, the volatile acidity works for it almost as much. Not so for Case 5.

The following plot shows the same thing albeit more succinctly.

plot_explanations(rf_explain)I don’t use lime with the other models because the story is the pretty much the same. Now that you know what to expect, feel free to try it out yourself.

Strengths & Weaknesses

Strengths

- A single tree is highly interpretable.

- Easily incorporates features of different types (categorical and numeric).

- Tolerance to irrelevant features.

- Some tolerance to correlated inputs.

- Good with big data.

- Handling of missing values.

Weaknesses

- Few, but like all techniques, it might be relatively less predictive in certain situations.

Final Thoughts

Random forests (and similar ensemble tree methods like boosting) are one of the better off-the-shelf classifiers you’ll come across. They handle a variety of the most common data scenarios49, are relatively fast, allow for an assessment of variable importance, and just plain work. Unless you have massive amounts of data, even all the complicated neural net approaches out there will struggle to do better50 in many common data situations without far more effort.

Support Vector Machines

Support Vector Machines (SVM) will be our last example, and are perhaps the least intuitive. SVMs seek to map the input space to a higher dimension via a kernel function, and in that transformed feature space, find a hyperplane that will result in maximal separation of the data.

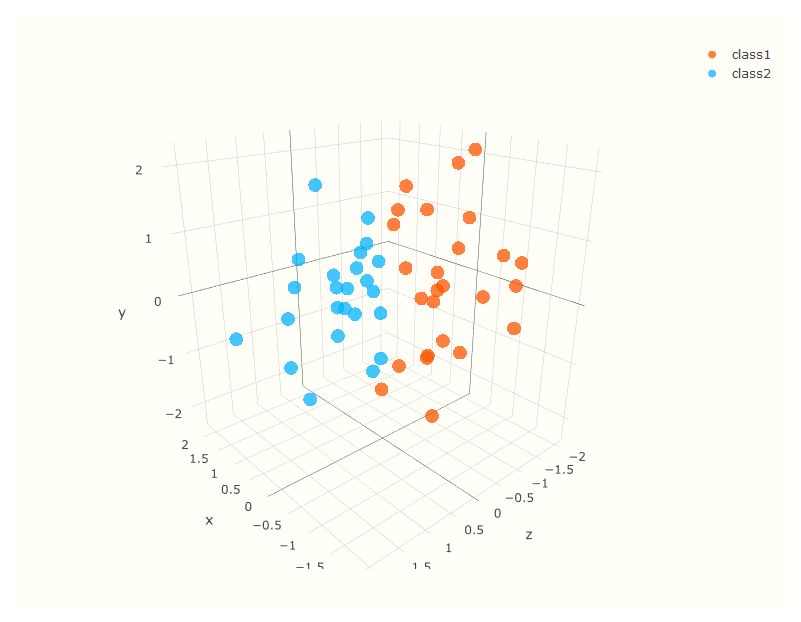

To better understand this process, consider the following image of two inputs, \(x\) and \(y\). Cursory inspection shows no easy separation between classes. However, if we can map the data to a higher dimension51, as shown in the following graph, we might find a more clear separation.

Note that there are a number of choices in regard to the kernel function that does the mapping, but in that higher dimension, the decision boundary is chosen which will result in maximum distance (largest margin) between classes. Real data will not be so clean cut, and total separation impossible, but the idea is the same.

For the following we use method = 'svmLinear2', where the underlying package is e1071. The tuning parameter is a regularization parameter applied to the cost function.

results_svm = train(good~.,

data=wine_train,

method='svmLinear2',

preProcess=c('center', 'scale'),

trControl=cv_opts,

tuneLength=5,

probability=TRUE) # to get probs along with classificationsSupport Vector Machines with Linear Kernel

5199 samples

9 predictor

2 classes: 'Bad', 'Good'

Pre-processing: centered (9), scaled (9)

Resampling: Cross-Validated (10 fold)

Summary of sample sizes: 4680, 4679, 4679, 4679, 4679, 4679, ...

Resampling results across tuning parameters:

cost Accuracy Kappa

0.25 0.7445666 0.4272628

0.50 0.7449513 0.4283748

1.00 0.7445666 0.4276426

2.00 0.7449513 0.4285039

4.00 0.7451436 0.4290000

Accuracy was used to select the optimal model using the largest value.

The final value used for the model was cost = 4.preds_svm = predict(results_svm, wine_test)

confusionMatrix(preds_svm, good_observed, positive='Good')Confusion Matrix and Statistics

Reference

Prediction Bad Good

Bad 270 123

Good 206 699

Accuracy : 0.7465

95% CI : (0.7219, 0.77)

No Information Rate : 0.6333

P-Value [Acc > NIR] : < 2.2e-16

Kappa : 0.4335

Mcnemar's Test P-Value : 6.16e-06

Sensitivity : 0.8504

Specificity : 0.5672

Pos Pred Value : 0.7724

Neg Pred Value : 0.6870

Prevalence : 0.6333

Detection Rate : 0.5385

Detection Prevalence : 0.6972

Balanced Accuracy : 0.7088

'Positive' Class : Good

Results for the initial support vector machine do not match the random forest for this data set, with accuracy of 74.7%. However, you might choose a different kernel than the linear one used here, as well as tinker with other options.

Strengths & Weaknesses

Strengths

- Good prediction in a variety of situations.

- Can utilize predictive power of linear combinations of inputs.

Weaknesses

- Very black box.

- Computational scalability.

- Natural handling of mixed data types.

Final Thoughts

Support vector machines can provide very good prediction in a variety of settings, while doing relatively poorly in others. They will often require a notable amount of tuning, whereas generalizations of the SVM would estimate such parameters as part of the process. In short, you will typically have better, more interpretable, and/or less computationally intensive options in most circumstances.

See, for example, the rescale function in the scales package.↩

For example, via principal components or partial least squares regression.↩

See Harrell (2015) for a good summary of reasons why not to.↩

The topic of ensembles is briefly noted later.↩

In situations where it is appropriate to calculate in the first place, model selection via AIC can often compare to the bootstrap and k-fold cross-validation approaches.↩

This term has always struck me as highly sub-optimal.↩

I think it would be interesting to have included characteristics of the people giving the rating.↩

Oddly, total.sulfur.dioxide is integer for all but a handful of values.↩

Some might recognize this approach. In traditional statistics settings, 1-R2 is known as tolerance, and its inverse is the variance inflation factor.↩

That alcohol content is a positive predictor of ‘quality’ is also seen with data I’ve scraped from BeerAdvocate. As such, if you are looking for a good drink via ratings, maybe temper the ratings a bit if the alcohol content is high.↩

Its now possible to use a model select the tuning parameters themselves, i.e. in which the parameters are outputs to the model, and the estimates would then be fed into the main model. Services such as Google’s CloudML already offer this, thus paving the way for data scientists to be fully automated well before truck drivers or fast food workers.↩

You can quickly obtain this plot via the dotplot function.↩

See the function dist in base R, for example.↩

Often referred to as unsupervised learning as there is no target/dependent variable. We do not cover such techniques here but they are a large part of machine learning just as they are with statistics in general.↩

See the knn.ani function in the animation package for a visual demonstration. Link to demo.↩

Note also you can just specify a tuning length instead. See the help file for the train function.↩

See table 10.1 in Hastie, Tibshirani, and Friedman (2009) for a more comprehensive list for this and other methods discussed in this section.↩

Neural nets, random forests, boosting, and additive models can all be put under the heading of basis function approaches.↩

For this example, ultimately the primary function is nnet in the nnet package that comes with base R.↩

You may find some rules of thumb from older sources, but using regularization and cross-validation is a much better way to ‘guess’.↩

In my opinion, random forests should be a baseline by which to judge new techniques. If one can’t consistently do better than an a standard random forest with default settings, there isn’t much to get excited about.↩

Our previous assessment was model independent.↩

Some might start with depth = 1.↩

I used lime’s default quantile binning approach which binarizes everything at their min, .25, .5, .75, and max quantile values. This makes for easier interpretation and comparison, but I also think that lime doesn’t evaluate the pre-processing argument of the model, and thus would be using the original data and weights that would reflect variable scales more than their importance.↩

A lot of papers testing various methods seem to avoid realistic data situations. One is never going to see data where every variable is normally or uniformly distributed, so it boggles a bit to think about why anyone would care if some technique does slightly better than a random forest under conditions that don’t exist naturally.↩

See Fernández-Delgado et al. (2014) for a recent assessment, but there have been others that come to similar conclusions.↩

Note that we regularly do this sort of thing in more mundane circumstances. For example, we map an \(\mathrm{N}\ \mathrm{x}\ \mathrm{p}\) matrix to an \(\mathrm{N}\ \mathrm{x}\ \mathrm{N}\) matrix when we compute a distance matrix for cluster analysis.↩